Welcome once again, and thanks for clicking on my journal! 🙂

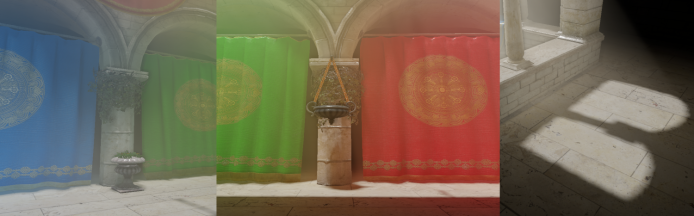

When we think of light propagation volumes we think of awesomeness with a ton of light bleeding, right? Well, I do, so I’ve been trying to limit the amount of light bleeding, and I find the current result acceptable without being too hackish.

The first change is the injection. Usually I would inject the lighting into an SH lighting map at the same position as the voxels, but once I do this I don’t have much information about which “direction” the lighting came from when I propagate it. In that case I would have three choices: light bleeding, an expensive occlusion calculation, or not propagating at all (but the last one wouldn’t be any fun…). So, what if instead of injecting the lighting at the voxel position, I inject it with a small offset along the approximated normal? This way the actual lighting information is injected into the empty space.
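As a rough sketch of the offset injection (not the engine's actual code), the target cell can be found by shifting the voxel position along its normal before converting to grid coordinates. The names `worldToCell`, `injectionCell` and the `offsetInCells` knob are made up for illustration, and the grid is assumed to be axis-aligned with a unit-length normal.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };
struct CellIndex { int x, y, z; };

// Map a world-space position to the grid cell that contains it.
// gridOrigin is the minimum corner of an axis-aligned grid (assumption).
inline CellIndex worldToCell(Vec3 p, Vec3 gridOrigin, float cellSize) {
    return {
        static_cast<int>(std::floor((p.x - gridOrigin.x) / cellSize)),
        static_cast<int>(std::floor((p.y - gridOrigin.y) / cellSize)),
        static_cast<int>(std::floor((p.z - gridOrigin.z) / cellSize)),
    };
}

// Pick the cell to inject into: push the voxel position along its
// (approximated, unit-length) normal so the light lands in empty space.
// offsetInCells is a hypothetical tuning value, not taken from the engine.
inline CellIndex injectionCell(Vec3 voxelPos, Vec3 normal, Vec3 gridOrigin,
                               float cellSize, float offsetInCells = 1.0f) {
    const float d = offsetInCells * cellSize;
    const Vec3 shifted{ voxelPos.x + normal.x * d,
                        voxelPos.y + normal.y * d,
                        voxelPos.z + normal.z * d };
    return worldToCell(shifted, gridOrigin, cellSize);
}
```

With a cell size of 1, a floor voxel at (2.5, 0.5, 2.5) with an upward normal would inject into cell (2, 1, 2) instead of its own cell (2, 0, 2).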

The second change I made was simply discarding propagation in any occluded voxels/cells, as the new method doesn’t require it. The issue with this is that if the cell size (how much a voxel/cell occupies in whatever unit your system uses) is way too big compared to the world, the propagation will visually fail and look horrible, so to look good a bit of performance has to be sacrificed.
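To make the "skip occluded cells" rule concrete, here is a toy CPU version of one propagation step. It is only a sketch under heavy assumptions: a single scalar per cell stands in for the SH coefficients, the step gathers from the six face neighbours, and the `share` constant is invented. The real thing runs in a compute shader.

```cpp
#include <cstddef>
#include <vector>

// Toy grid: one scalar per cell stands in for the SH coefficients.
struct Grid {
    int sx, sy, sz;
    std::vector<float> energy;
    std::vector<bool> occluded;  // true where a voxel fills the cell

    Grid(int x, int y, int z)
        : sx(x), sy(y), sz(z),
          energy(static_cast<std::size_t>(x * y * z), 0.0f),
          occluded(static_cast<std::size_t>(x * y * z), false) {}

    std::size_t index(int x, int y, int z) const {
        return static_cast<std::size_t>((z * sy + y) * sx + x);
    }
    bool inside(int x, int y, int z) const {
        return x >= 0 && y >= 0 && z >= 0 && x < sx && y < sy && z < sz;
    }
};

// One gather-style propagation step over the six face neighbours.
// Occluded cells are skipped entirely: they neither receive nor pass on light.
// `share` (the fraction taken from each neighbour) is a made-up constant.
inline void propagateStep(Grid& g, float share = 1.0f / 6.0f) {
    static const int kOffsets[6][3] = {
        {1, 0, 0}, {-1, 0, 0}, {0, 1, 0}, {0, -1, 0}, {0, 0, 1}, {0, 0, -1}};
    std::vector<float> next = g.energy;  // accumulate on top of current values
    for (int z = 0; z < g.sz; ++z)
    for (int y = 0; y < g.sy; ++y)
    for (int x = 0; x < g.sx; ++x) {
        const std::size_t i = g.index(x, y, z);
        if (g.occluded[i]) continue;  // no propagation into occluded cells
        for (const auto& o : kOffsets) {
            const int nx = x + o[0], ny = y + o[1], nz = z + o[2];
            if (!g.inside(nx, ny, nz)) continue;
            const std::size_t n = g.index(nx, ny, nz);
            if (g.occluded[n]) continue;  // and none out of them either
            next[i] += share * g.energy[n];
        }
    }
    g.energy.swap(next);
}
```

Because a whole cell is either open or blocked, a wall thinner than one cell still blocks the full cell, which is one way to see why an oversized cell size makes the result fall apart.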

The last change is that when sampling the final indirect GI I apply a small offset, as all the lighting information is in the “empty” cells. One might say that this is a crude approximation, but I don’t find it that horrible.
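The sampling side can be sketched the same way: step from the shaded surface point along its normal and read the neighbouring empty cell. This is only an illustration with assumed names (`LightGrid`, `sampleIndirect`, `offsetInCells`), a scalar per cell instead of SH, and a nearest-cell lookup instead of whatever filtering the real shader uses.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { float x, y, z; };

// Tiny dense grid of scalar light values (a stand-in for the SH lighting map).
struct LightGrid {
    int dim;         // cells per axis (cubic grid with its corner at the origin)
    float cellSize;  // world units per cell
    std::vector<float> light;

    LightGrid(int d, float s)
        : dim(d), cellSize(s), light(static_cast<std::size_t>(d * d * d), 0.0f) {}

    float& at(int x, int y, int z) {
        return light[static_cast<std::size_t>((z * dim + y) * dim + x)];
    }

    // Nearest-cell lookup; positions outside the grid read as unlit.
    float fetch(Vec3 p) const {
        const int x = static_cast<int>(std::floor(p.x / cellSize));
        const int y = static_cast<int>(std::floor(p.y / cellSize));
        const int z = static_cast<int>(std::floor(p.z / cellSize));
        if (x < 0 || y < 0 || z < 0 || x >= dim || y >= dim || z >= dim) return 0.0f;
        return light[static_cast<std::size_t>((z * dim + y) * dim + x)];
    }
};

// Sample indirect light for a surface point by stepping along its unit normal
// into the neighbouring empty cell. offsetInCells is a hypothetical knob.
inline float sampleIndirect(const LightGrid& g, Vec3 surfacePos, Vec3 normal,
                            float offsetInCells = 1.0f) {
    const float d = offsetInCells * g.cellSize;
    return g.fetch(Vec3{ surfacePos.x + normal.x * d,
                         surfacePos.y + normal.y * d,
                         surfacePos.z + normal.z * d });
}
```

Sampling without the offset would read the occupied cell, which never received any light under this scheme.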

So, there you have it, that’s my current recipe for an LPV system without bleeding. There are still lots of things to fix, but it’s a start.

In my last entry I talked about a cascaded LPV system; however, this has slightly changed. You can still configure multiple cascades, but the way it works is slightly different. In each cascade the system will create two grids, a high frequency grid and a low frequency grid (the dimensions of the grids are still intact). The low frequency grid represents the low frequency lighting information, and the high frequency grid represents the slightly higher frequency lighting information. The two grids are treated as separate grids with different cell sizes, but when rendered the energy proportion is taken into account.

So I’m fairly happy with how my LPV system has progressed and I find the results acceptable. Obviously there’s still the issue with the “blocky” look (if you want acceptable performance 🙂), which I’ll try to mess around with and share my results later on.

Now, let’s steer slightly away from that and think about volumetric fog! Yes! That’s right!

Volumetric Lighting!

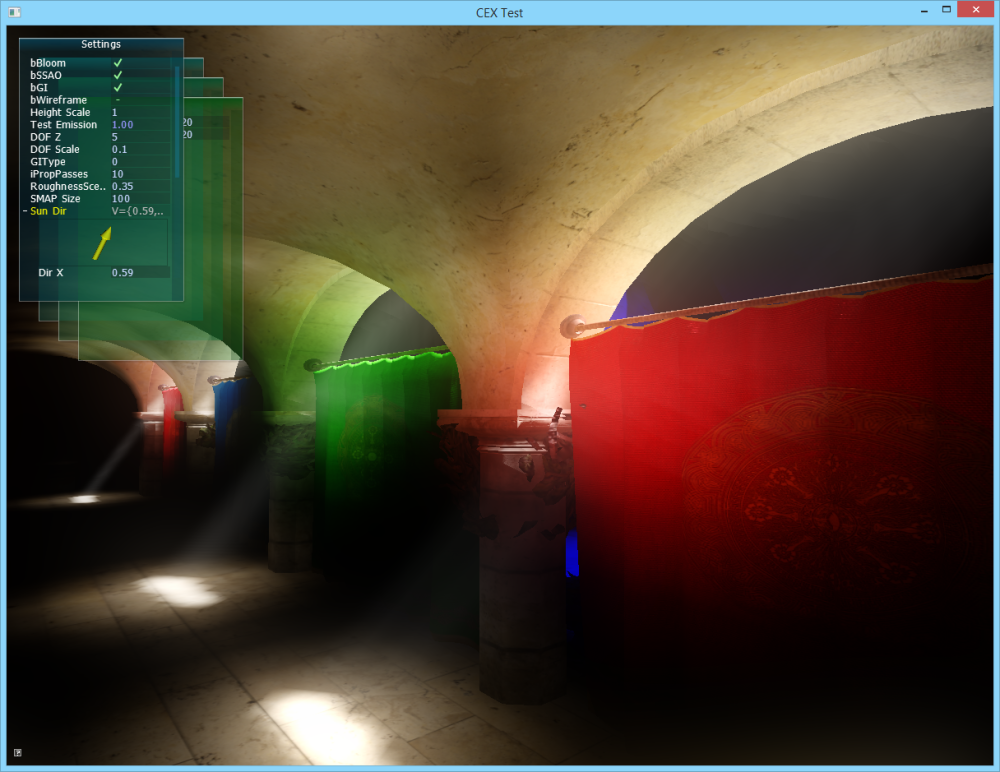

So to make the volumetric lighting feel more “part” of the scene I integrated the indirect GI system. Currently I have a very basic volumetric lighting setup: raymarch from the camera to the world space position at the pixel and slowly accumulate the lighting (the method I used to calculate the lighting is based on the volumetric lighting in the game “Lords of the Fallen”). At each raymarch step I also sample the indirect GI from the propagated lighting map and multiply that in. And I’m really liking the results!
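A bare-bones CPU sketch of that loop might look like the following. It does not reproduce the “Lords of the Fallen” lighting model: `directLight` and `indirectGI` are placeholder callbacks, `density` is an invented constant, and the indirect term is multiplied in simply because that is how the setup is described above.

```cpp
#include <cmath>
#include <functional>

struct Vec3 { float x, y, z; };

// Placeholder callbacks: how much direct light / propagated GI reaches a point.
using LightFn = std::function<float(const Vec3&)>;

// March from the camera to the surface seen at this pixel, accumulating
// in-scattered light at the midpoint of each segment.
inline float raymarchFog(Vec3 camera, Vec3 surface, int steps, float density,
                         const LightFn& directLight, const LightFn& indirectGI) {
    if (steps <= 0) return 0.0f;
    const Vec3 delta{ (surface.x - camera.x) / steps,
                      (surface.y - camera.y) / steps,
                      (surface.z - camera.z) / steps };
    const float stepLen =
        std::sqrt(delta.x * delta.x + delta.y * delta.y + delta.z * delta.z);

    float accumulated = 0.0f;
    for (int i = 0; i < steps; ++i) {
        const float t = static_cast<float>(i) + 0.5f;  // segment midpoint
        const Vec3 p{ camera.x + delta.x * t,
                      camera.y + delta.y * t,
                      camera.z + delta.z * t };
        // Direct lighting modulated by the indirect GI sampled at the same point.
        accumulated += directLight(p) * indirectGI(p) * density * stepLen;
    }
    return accumulated;
}
```

Every step costs one extra lighting-map sample on top of the direct lighting, so the step count is the obvious performance knob.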

(I know the roughness / specular looks wrong, I still need to integrate the roughness / specular maps from the Sponza scene.) (And I seriously improved the quality of the GIFs…)

Now! The only issue with this is… performance! All of that added together is asking your hardware to commit suicide; at least, mine did. Since I’m addicted to Dota 2, I was having a casual game with some friends and decided to program in the background. For some reason I was writing to and reading from an unbound UAV in my compute shader (I didn’t realize this). The result was the GPU completely freezing (I could still talk to and hear my friends, whilst freaking out). I waited for the TDR duration, but the TDR never occurred. So in the end I had to force a shutdown and restart quickly in order to participate in the game (we won though!). I was actually scared to start it again even though I had bound the UAV…

Looking aside from that, I’ve also implemented some basic debugging tools for the LPV system, such as getting the lighting information from each cell position (it’s extremely simple to implement, but really helps a lot).

Previously my engine had a pretty horrible architecture, because I’m horrible at architecture, I’m a horrible person. So I decided to attempt to improve the architecture of the engine. I decided to split the engine up into:

- Helpers : Just general things, common math stuff / etc…

- Native Modules : Shaders, Containers, etc

- User Modules : An example would be a custom voxel filler or whatever, depends on the type

- Chains : Responsible for higher level actions, such as Shadow Mapping, Voxel GI, etc…

- Device : Basically combining chains and working with them

Now I’m not saying that this is ideal or even good, but I find it nice and functional. The user modules are a bit special: they are custom modules that the programmer can create. However, each module has to derive from a module type. An example is the GI system, which has a special module type that allows the modification of the lighting maps before the propagation. The programmer would then inherit from this type, override the pure virtual functions, and push the module to a queue. I made a small module that “approximates” the indirect radiance from the “sky” (assuming that there is one) just to test around. The native C++ code is fairly straightforward. This specific module type also has a bunch of predefined macros in a shader file to ease the process. Here is the shader code for this testing module:

```hlsl
#include "module_gridshfill.hlsl"

// Our basic module definition
MODULE((8, 1, 8), (uint3 CellPos : MODULE_CELLID)
{
    // Testing data, This is just magic and stuff, not correct at all
    float fFactor = 0.01f;
    float3 f3Color = float3(0.658, 0.892, 1);
    float3x4 f3x4AmbientSH =
    {
        fFactor.xxxx * f3Color.x,
        fFactor.xxxx * f3Color.y,
        fFactor.xxxx * f3Color.z
    };

    // Raymarch Down
    [loop]
    for (CellPos.y = g_fVoxelGridSize-1; CellPos.y >= 0; CellPos.y--)
    {
        // Get the voxel
        VoxelData voxel = FETCH(CellPos - uint3(0, 1, 0));

        // If this voxel is occupied break the march
        // TODO: Semi occluded voxels (1<w>0)
        if (voxel.fOcclusion > 0)
        {
            break;
        }

        // Write the new value on top of the current value
        WRITE_ADDITION(CellPos, f3x4AmbientSH);
    }
});
```

Now some of the stuff will change for sure, although it works fine for now. The result of the above is indirect radiance from the “sky”. And it looks alright! So I’m pretty happy with the module system.
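For the native side of such a user module, a minimal sketch could look like this. Every name here (`GIPrePropagationModule`, `SkyRadianceModule`, `GISystem`, `pushModule`) is hypothetical and not taken from the engine, and the module body just adds a flat amount to a CPU-side array where the real one would dispatch the shader.

```cpp
#include <memory>
#include <utility>
#include <vector>

// Stand-in for the SH lighting map: one float per cell.
using LightingMap = std::vector<float>;

// Hypothetical module type: runs on the lighting maps before propagation.
class GIPrePropagationModule {
public:
    virtual ~GIPrePropagationModule() = default;
    virtual const char* name() const = 0;
    virtual void apply(LightingMap& lighting) = 0;
};

// Example user module: adds a constant "sky" term to every cell.
// (The real module would bind and dispatch its shader here instead.)
class SkyRadianceModule final : public GIPrePropagationModule {
public:
    explicit SkyRadianceModule(float amount) : amount_(amount) {}
    const char* name() const override { return "sky_radiance"; }
    void apply(LightingMap& lighting) override {
        for (float& cell : lighting) cell += amount_;
    }
private:
    float amount_;
};

// The owning system keeps a queue of modules and runs them in push order.
class GISystem {
public:
    void pushModule(std::unique_ptr<GIPrePropagationModule> module) {
        queue_.push_back(std::move(module));
    }
    void runPrePropagation(LightingMap& lighting) {
        for (auto& module : queue_) module->apply(lighting);
    }
private:
    std::vector<std::unique_ptr<GIPrePropagationModule>> queue_;
};
```

Usage would be along the lines of `gi.pushModule(std::make_unique<SkyRadianceModule>(0.01f));` followed by `gi.runPrePropagation(map);` once per frame before the propagation pass.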

On a completely different note, I suddenly have this weird craving to work on my scripting language again… (I know, I know, just use an existing one… But where would the fun be in that!? 🙂) And I soon need to reimplement some sort of physics engine in this version of my engine. So, there’s still lots of fun!

Apart from some more or less small changes and additions, that’s more or less it, folks! It’s been a heavy week though, lots of things happening. For example, my dog found out that a full day barbecue party is extremely tiring: he didn’t want to walk or anything and slept like a stone… (He loves walks.)

See you next time!